A commercially available AI model was on par with radiologists when detecting abnormalities on chest x-rays, and eliminated a gap in accuracy between radiologists and nonradiologist physicians, according to a study published October 24 in Scientific Reports.

Taken together, the findings suggest that the AI system -- Imagen Technologies' Chest-CAD -- can support different physician specialties in accurate chest x-ray interpretation, noted Pamela Anderson, director of data and insights at Imagen, and colleagues.

"Nonradiologist physicians [emergency medicine, family medicine, and internal medicine] are often the first to evaluate patients in many care settings and routinely interpret chest x-rays when a radiologist is not readily available," they wrote.

Chest-CAD is a deep-learning algorithm cleared by the U.S. Food and Drug Administration in 2021. The software identifies suspicious regions of interest (ROIs) on chest x-rays and assigns each ROI to one of eight clinical categories consistent with reporting guidelines from the RSNA.

For such cleared AI models to be the most beneficial, they should be able to detect multiple abnormalities, generalize to new patient populations, and enable clinical adoption across physician specialties, the authors suggested.

To that end, the researchers first assessed Chest-CAD’s standalone performance on a large curated dataset. Next, they assessed the model’s generalizability on separate publicly available data, and lastly evaluated radiologist and nonradiologist physician accuracy when unaided and aided by the AI system.

Standalone performance for identifying chest abnormalities was assessed on 20,000 adult chest x-ray cases from 12 healthcare centers in the U.S., a dataset independent from the one used to train the AI system. Relative to a reference standard defined by a panel of expert radiologists, the model demonstrated a high overall area under the curve (AUC) of 97.6%, a sensitivity of 90.8%, and a specificity of 88.7%, according to the results.

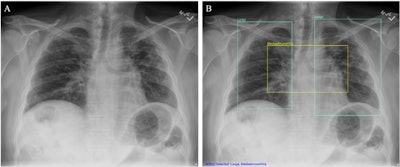

(A) Chest x-ray unaided by the AI system. (B) Chest x-ray aided by the AI system shows two Lung ROIs (green boxes) and a Mediastinum/Hila ROI (yellow box). The AI system identified abnormalities that were characterized as regions of interest in Lungs and Mediastinum/Hila. The abnormalities were bilateral upper lobe pulmonary fibrosis (categorized as ‘Lungs’), and pulmonary artery hypertension along with bilateral hilar retraction (categorized as ‘Mediastinum/Hila’). The ROIs for each category are illustrated in different colors for readability. Image available for republishing under Creative Commons license (CC BY 4.0 DEED, Attribution 4.0 International) and courtesy of Scientific Reports.

Next, on 1,000 cases from a publicly available National Institutes of Health dataset of chest x-rays called ChestX-ray8, the AI system demonstrated a high overall AUC of 97.5%, a sensitivity of 90.7%, and a specificity of 88.7% in identifying chest abnormalities.
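For readers less familiar with these metrics, the short Python sketch below shows how sensitivity, specificity, and AUC are typically computed from a model's scores and a reference standard. It is an illustration only: the labels, scores, and 0.5 threshold are made-up toy values, not data from the study, and the function names are hypothetical rather than anything from Chest-CAD or the paper.

```python
def sensitivity_specificity(labels, predictions):
    """Return (sensitivity, specificity) for binary labels and predictions (1 = abnormal)."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)  # abnormal, flagged
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)  # abnormal, missed
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)  # normal, not flagged
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)  # normal, flagged
    return tp / (tp + fn), tn / (tn + fp)


def auc(labels, scores):
    """AUC as the chance that a random abnormal case outscores a random normal one.

    Ties count as half a win. This pairwise form is fine for small examples.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data (assumed for illustration; NOT from the study).
toy_labels = [1, 1, 1, 1, 0, 0, 0, 0]                   # reference standard
toy_scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.4, 0.2, 0.1]   # model output scores
toy_threshold = 0.5                                      # assumed operating point

toy_predictions = [1 if s >= toy_threshold else 0 for s in toy_scores]
toy_sens, toy_spec = sensitivity_specificity(toy_labels, toy_predictions)
toy_auc = auc(toy_labels, toy_scores)
print(f"sensitivity={toy_sens:.3f} specificity={toy_spec:.3f} AUC={toy_auc:.3f}")
```

Sensitivity and specificity depend on the chosen threshold, while AUC summarizes performance across all thresholds, which is why studies such as this one usually report all three.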

Finally, as expected, radiologists were more accurate than nonradiologist physicians at identifying abnormalities in chest x-rays when unaided by the AI system, the authors wrote.

However, radiologists still improved when assisted by the AI system, from an unaided AUC of 86.5% to an aided AUC of 90%, according to the findings. Internal medicine physicians showed the largest improvement, from an unaided AUC of 80% to an aided AUC of 89.5%.

While there was a significant difference in AUCs between radiologists and non-radiologist physicians when unaided (p

“We showed that overall physician accuracy improved when aided by the AI system, and non-radiologist physicians were as accurate as radiologists in evaluating chest x-rays when aided by the AI system,” the group wrote.

Ultimately, due to a shortage of radiologists, especially in rural counties in the U.S., other physicians are increasingly being tasked with interpreting chest x-rays, despite lack of training, the team noted.

“Here, we provide evidence that the AI system aids nonradiologist physicians, which can lead to greater access to high-quality medical imaging interpretation,” it concluded.

The full study is available here.
