Radiologists and other physicians tend to trust AI more when the algorithms provide local rather than global explanations of findings on chest x-rays, suggests research published November 19 in Radiology.

The study result is from work that simulated AI-assisted chest x-ray reads by 220 physicians (132 radiologists) who rated their confidence in the AI advice, noted senior author Paul Yi, MD, of St. Jude Children’s Research Hospital in Memphis, TN.

“When provided local explanations, both radiologists and non-radiologists in the study tended to trust the AI diagnosis more quickly, regardless of the accuracy of AI advice,” Yi said in a news release from RSNA.

Clinicians have called for AI imaging tools to be transparent and interpretable, and there are two main categories of explanations that AI tools provide, Yi and colleagues explained: local explanations, where the AI highlights parts of the image deemed most important, and global explanations, where AI provides similar images from previous cases to show how it arrived at its decision.

Clinicians and AI developers disagree about the usefulness of these two AI explanation types, yet few studies have evaluated the interpretability and trust of the explanations among human participants, they added.

To explore the issue, the group asked 220 radiologists and internal medicine/emergency medicine physicians to read chest x-rays alongside AI advice. Each physician was tasked with evaluating eight chest x-ray cases alongside suggestions from a simulated AI assistant with diagnostic performance comparable to that of experts in the field.

For each case, participants were presented with the patient’s clinical history, the AI advice, and x-ray images, with AI providing either a correct or incorrect diagnosis with local or global explanations. The readers could accept, modify, or reject the AI suggestions, and were also asked to report their confidence level in the findings and impressions and to rank the usefulness of the advice.

“These local explanations directly guide the physician to the area of concern in real-time. In our study, the AI literally put a box around areas of pneumonia or other abnormalities,” Yi said.

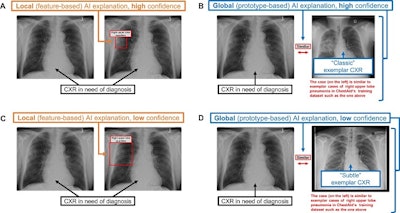

Chest x-ray examples of (A, C) local (feature-based) AI explanations and (B, D) global (prototype-based) AI explanations from a simulated AI tool, ChestAId, presented to physicians in the study. In all examples, the correct diagnostic impression for the radiograph case in question is “right upper lobe pneumonia,” and the corresponding AI advice is correct. The patient clinical information associated with this chest radiograph was “a 63-year-old male presenting to the emergency department with cough.” To better simulate a realistic AI system, explanation specificity was changed according to high (i.e., 80%–94%) or low (i.e., 65%–79%) AI confidence level: bounding boxes in high-confidence local AI explanations (example in A) were more precise than those in low-confidence ones (example in C); high-confidence global AI explanations (example in B) had more classic exemplar images than low-confidence ones (example in D), for which the exemplar images were more subtle.

The researchers defined “simple trust” by how quickly the physicians agreed or disagreed with AI advice. They analyzed the results with mixed-effects models, controlling for factors such as user demographics and professional experience.

According to the results, physicians were more likely to align their diagnostic decisions with AI advice, and spent less time considering it, when the AI provided local explanations. When the AI advice was correct, the average diagnostic accuracy among physicians was 92.8% with local explanations and 85.3% with global explanations. When the AI advice was incorrect, physician accuracy was 23.6% with local and 26.1% with global explanations.
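To make the size of those differences concrete, the short Python sketch below computes the percentage-point gap between local and global explanations for each advice condition. It uses only the four accuracy figures reported above; the dictionary layout and function name are illustrative and do not come from the study, and the arithmetic says nothing about statistical significance.

```python
# Accuracy figures (percent) as reported in the article.
# The data layout and names below are illustrative, not from the study.
accuracy = {
    ("correct", "local"): 92.8,
    ("correct", "global"): 85.3,
    ("incorrect", "local"): 23.6,
    ("incorrect", "global"): 26.1,
}


def local_minus_global(advice: str) -> float:
    """Percentage-point gap (local minus global) for one AI advice condition."""
    gap = accuracy[(advice, "local")] - accuracy[(advice, "global")]
    # Round to one decimal place to avoid floating-point noise.
    return round(gap, 1)


gap_correct = local_minus_global("correct")
gap_incorrect = local_minus_global("incorrect")

print(f"AI correct:   local minus global = {gap_correct:+.1f} points")
print(f"AI incorrect: local minus global = {gap_incorrect:+.1f} points")
```

A positive value means physicians were more accurate with local explanations; a negative value means they were more accurate with global ones.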

“Compared with global AI explanations, local explanations yielded better physician diagnostic accuracy when the AI advice was correct,” Yi noted. “They also increased diagnostic efficiency overall by reducing the time spent considering AI advice.”

Ultimately, based on the study, Yi said that AI system developers should carefully consider how different forms of AI explanation might impact reliance on AI advice.

“I really think collaboration between industry and health care researchers is key. I hope this paper starts a dialog and fruitful future research collaborations,” Yi said.

The full study can be found here.
